Rethinking Privacy in the Age of AI

AI is rewriting the rules of identity - and not always for the better. At Web Summit Vancouver, Proof CEO Pat Kinsel delivered a pointed message: in the age of AI agents, businesses can’t afford to treat privacy and security as an afterthought.

The conversation covered hard truths about AI-driven fraud, real-world attack patterns, and the urgent need for identity-first systems. Here’s a breakdown of what matters most.

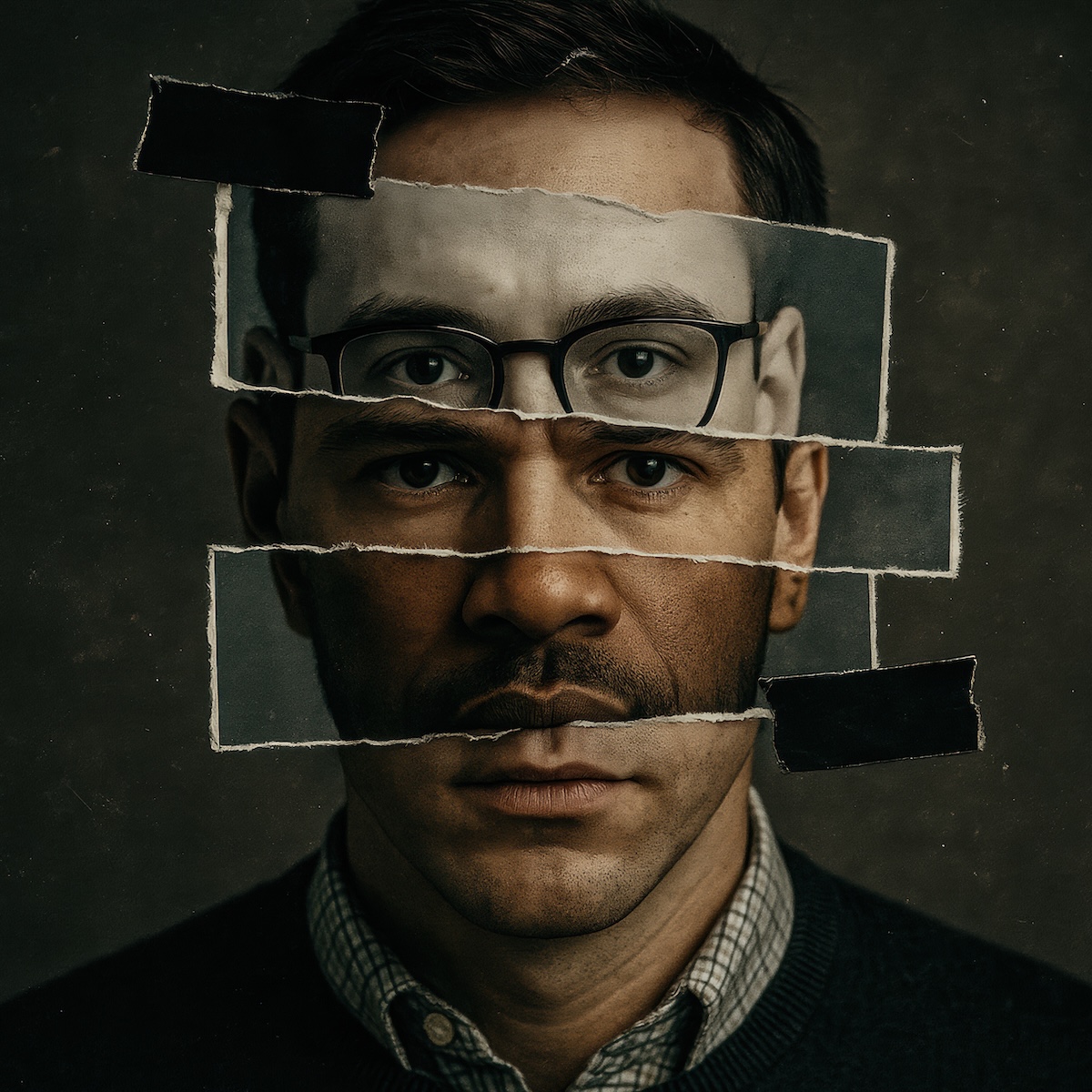

AI Fraud Moves Fast. Businesses Need to Move Faster.

AI now mimics human behavior with startling accuracy. Pat shared a story of a chatbot generating over 50,000 fraudulent identity applications in an automated attack that would’ve been unthinkable just a few years ago.

Bad actors are no longer just hackers behind screens. They're posing as your employees, applicants, even family members. Recent examples include:

- Fraudsters impersonating relatives to steal retirement funds and real estate.

- Fake job applicants exploiting hiring systems for payouts.

The message is clear: fraud is getting smarter. Security needs to get smarter too.

A Better Blueprint for AI Privacy

Pat laid out a vision for building privacy into the foundation of AI systems, not bolting it on afterward. His recommendations:

- Use cryptographic signatures and data permissions to verify actions and protect personal information.

- Model AI agents after real-world authority structures (like power of attorney) to ensure clear decision rights.

- Verify identity continuously, not just at onboarding.

The tools to protect data already exist. The challenge? Getting companies to use them before they’re forced to.

Why “Move Fast, Break Things” Breaks Trust

Racing to ship AI products without securing them is a risk businesses can’t afford. Pat warned against the common “fraud is just a cost of doing business” mindset.

With AI, that cost is growing exponentially.

Instead, he urged companies to build fraud prevention into their product roadmap from day one - and to make collaboration between security, IT, and compliance teams non-negotiable.

We Can’t Rely on the Bad Guys to Play Fair

Some tools, like content provenance labels and watermarks (C2PA), help flag AI-generated material. But as Pat put it, “The Russians aren’t going to watermark content.” That’s why businesses need stronger frameworks:

- Use shared, general-purpose credentials to minimize the need for sensitive data collection.

- Engage users in fraud detection, just like credit card networks do.

- Push for smarter regulation, not just around global competition, but around workforce impact and personal privacy.

Pat’s call to action: We need to define what we value as a society before the default choices are made for us.

Let’s Secure the Future - Now

Pat ended with a challenge: AI isn’t the problem. Complacency is.

The security solutions are here. What’s needed now is urgency - and a commitment to identity protection that matches the speed of innovation. Watch the full session to hear more about how to protect your business and your customers in an AI-driven world. (Click here to watch on YouTube)

Want to see how Proof helps organizations verify identity, detect fraud, and build trust at scale? Explore Proof’s identity verification capabilities. And to see where we’ll be next, check out our event page.
