Cracking the Identity Code: Traditional Fraud Controls Fail to Stop Scams

Fraud, Scams, and Broken Policy - Part 2 of 3:

Welcome back to Cracking the Identity Code! We’ve broken our next installment, “Fraud, Scams, and Broken Policy,” into a three-part series breaking down the current state of scams in the U.S. In Part 2, we take a closer look at how novel payment systems have created novel fraud threats, from impersonation to first-party fraud. We also explain why securing account access is no longer enough, and why banks need to think more strategically about the context behind every identity and every transaction.

Traditional Fraud Controls Fail to Stop Scams

Not long ago, fraud attacks mostly involved stolen cards or hacked accounts. But as banks and merchants fortified their systems, criminals shifted their focus to the human element. Today’s fastest-growing fraud tactics center on deception and involve increasingly sophisticated social engineering and impersonation attacks.

Remember the imposter scams from Part 1? The ones where someone poses as your bank, a government agency, a romantic partner, or even your CEO? They are now a leading type of fraud in the U.S., responsible for close to $3 billion in losses in 2024. These scams don’t involve stealing your passwords; they involve a scammer exploiting your trust, your biggest fears, and your hopes and dreams.

Another fast-growing threat in the U.S. comes from customers themselves (or those posing as customers) gaming the system from within. First-party fraud, such as falsely disputing charges you actually made or taking out loans with no intent to repay, has exploded. In fact, first-party fraud is now the leading type of fraud worldwide, accounting for 36% of all reported fraud cases in 2024.

Both trends – scams that manipulate genuine users, and users who themselves commit fraud – expose the same truth: our current fraud controls are not built to handle fraud that blurs the line between victim and perpetrator.

Authentication Can’t Catch a Con

Financial institutions have poured billions of dollars into securing accounts. Sophisticated device fingerprinting, biometric logins, and two-factor codes are designed to ensure that only the rightful customer can log into an account or approve a transaction. But no matter how many correct passwords, successful Face ID unlocks, or one-time codes a customer provides, systems designed to secure access can’t interpret the intent behind a transaction. If a system sees a customer as “real,” transactions flow smoothly. As a result, social engineering scams often fly under the radar until it’s too late.

Our banking apps can confirm it’s us logging in, but they have little way of determining whether the money we’re sending is actually going to our electrician or straight to a scammer. By the time the fraud is discovered (usually when we call our bank in a panic hours or days later), the money has vanished. It’s a frustrating irony: banks have never been better at keeping the wrong people out of your account, yet they have few protections to offer when it’s you who lets the scammer in.

There’s also a blind spot on the receiving side of transactions. When you authorize a payment, banks struggle to verify what seem like simple things, such as whether the name you entered for the payee matches the actual account holder’s name. In the UK, banks implemented Confirmation of Payee checks to address this, alerting customers when a payee’s name doesn’t match. Newer payment technologies like Zelle, Venmo, and Square Cash have implemented similar approaches, but across the majority of U.S. payments these measures have yet to gain widespread adoption. (Note: name matching used to require pages of code, but with AI, one would think every payment system on earth has the power to do it.)

Even on these newer payment platforms, scammers get around such checks by directing payments to bank accounts they control, often opened under fake or stolen identities. When these payment companies confirm a scam, they create negative lists keyed off cell phone numbers, email addresses, Social Security numbers, and similar identifiers to limit future damage. The problem is that every one of those keys can be manufactured to facilitate a scam (even SSNs), and that vulnerability exists because the manufactured key is not tethered to anyone’s true identity.
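To make the name-matching idea above concrete, here is a minimal sketch of a Confirmation of Payee-style check. It is not the UK scheme’s actual matching logic and uses no AI: it is plain string similarity from Python’s standard `difflib`, and the function names `normalize` and `check_payee` and the `CLOSE_MATCH` threshold are hypothetical choices for illustration. The three outcomes (match, close match, no match) mirror the kind of feedback such checks give the payer.

```python
import re
from difflib import SequenceMatcher

# Hypothetical threshold, for illustration only (not taken from any real scheme).
CLOSE_MATCH = 0.85

def normalize(name: str) -> str:
    """Lowercase, drop punctuation, and sort tokens so 'Smith, John' == 'John Smith'."""
    tokens = re.findall(r"[a-z0-9]+", name.lower())
    return " ".join(sorted(tokens))

def check_payee(entered: str, account_holder: str) -> str:
    """Compare the name the payer typed with the name on the receiving account."""
    a, b = normalize(entered), normalize(account_holder)
    ratio = SequenceMatcher(None, a, b).ratio()  # 1.0 means identical strings
    if ratio == 1.0:
        return "match"
    if ratio >= CLOSE_MATCH:
        return "close_match"  # e.g., a likely typo; show the payer the real name
    return "no_match"

print(check_payee("Smith, John", "John Smith"))               # match
print(check_payee("Jon Smith", "John Smith"))                 # close_match
print(check_payee("Electric Co Ltd", "Quick Cash Holdings"))  # no_match
```

Note the limit the paragraph describes: a perfect “match” only says the typed name equals the name on the account. If the scammer opened that account under a fake or stolen identity, the check passes anyway.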

Evolving Scams Outsmart Static Defenses

Traditional fraud filters, which are good at catching unauthorized access (say, blocking a login from an unusual location), are far less adept at sensing the subtle signs of a con in progress. The “red flags” in an authorized scam are more contextual: the timing, the behavior, and the communication surrounding the transaction. For instance, a customer suddenly emptying their account via multiple rapid transfers might be a sign of distress or coercion that a basic rule-based system could miss. To truly thwart scams, banks need to deploy more nuanced, real-time behavioral analytics that can detect when a customer’s pattern suggests something is off.
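One narrow signal from the paragraph above, a customer emptying their account through multiple rapid transfers, can be expressed as a sliding-window check. This is a minimal sketch under stated assumptions: the `Transfer` type, the `looks_like_rapid_drain` function, and all three thresholds are hypothetical, and the whole window is compared against the balance at the start of the session, which is a simplification. A real behavioral system would learn each customer’s own baseline rather than rely on fixed cutoffs.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List

@dataclass
class Transfer:
    timestamp: datetime
    amount: float

# Hypothetical thresholds, for illustration only.
WINDOW = timedelta(minutes=15)   # how far back the sliding window reaches
MAX_TRANSFERS_IN_WINDOW = 3      # more transfers than this inside WINDOW counts as "rapid"
DRAIN_FRACTION = 0.5             # share of the starting balance that counts as "emptying"

def looks_like_rapid_drain(transfers: List[Transfer], starting_balance: float) -> bool:
    """True if some window of length WINDOW holds more than MAX_TRANSFERS_IN_WINDOW
    transfers that together move at least DRAIN_FRACTION of the starting balance."""
    ordered = sorted(transfers, key=lambda t: t.timestamp)
    start = 0
    window_total = 0.0
    for end, transfer in enumerate(ordered):
        window_total += transfer.amount
        # Drop the oldest transfers until the window spans at most WINDOW.
        while transfer.timestamp - ordered[start].timestamp > WINDOW:
            window_total -= ordered[start].amount
            start += 1
        count = end - start + 1
        if count > MAX_TRANSFERS_IN_WINDOW and window_total >= DRAIN_FRACTION * starting_balance:
            return True
    return False

base = datetime(2024, 1, 1, 23, 0)
# Four $1,200 transfers three minutes apart, late at night, from a $5,000 balance.
burst = [Transfer(base + timedelta(minutes=3 * i), 1_200.0) for i in range(4)]
# The same four transfers spread over four days.
spread = [Transfer(base + timedelta(days=i), 1_200.0) for i in range(4)]
print(looks_like_rapid_drain(burst, 5_000.0))   # True
print(looks_like_rapid_drain(spread, 5_000.0))  # False
```

The same dollar amounts produce different answers depending only on timing, which is the kind of contextual signal a per-transaction amount limit cannot see.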

Consider a few scenarios that might warrant step-up monitoring:

- Unprecedented new payee or destination: A large transfer to a brand-new payee or an account in a foreign country that the customer has never transacted with before.

- Unusual timing and frequency: A flurry of transactions or a very large payment made outside the customer’s normal routine (e.g., late at night, or multiple transfers within minutes).

- Signals of communication or urgency: Transactions that occur immediately after the customer receives a phone call or message, especially if the customer is actively on a call during the banking session (a sign they may literally be coached by a scammer in real time).

Identifying these red flags is technically tricky but not impossible, and it’s where a lot of fraud prevention is headed. Some banks have started to integrate “session behavior” analysis, such as noticing whether a user copies and pastes a number (possibly from a scam message) or toggles rapidly between apps during a transaction, which might indicate they’re following instructions. The key is context: understanding not just the transaction, but the situation around it. A rule that says “block all $5,000 transfers” would be overkill, but a system that says “pause and verify this $5,000 transfer because it’s the first-ever payment to a new overseas account right after the customer got an inbound call” will impede scams. The issue remains that someone being scammed is emotionally invested. Even deliberately designed roadblocks will be dismissed if someone believes they HAVE to send the money. This is why institutions, payment systems, and vendors must focus on the true identity of the receiver.
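The contrast above between a flat “block all $5,000 transfers” rule and a context-aware rule can be sketched in a few lines. This is a hypothetical illustration, not any bank’s actual logic: the `PaymentContext` fields, the weights, and the thresholds are assumptions, and the sketch assumes these context flags (first-ever payee, overseas destination, unusual hours, call activity) are already available from the banking session.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class PaymentContext:
    amount: float
    first_payment_to_payee: bool
    foreign_destination: bool
    outside_usual_hours: bool
    on_active_call: bool
    minutes_since_inbound_call: Optional[float]  # None if no recent call was observed

# Hypothetical weights and thresholds, for illustration only.
LARGE_AMOUNT = 1_000.0
RECENT_CALL_MINUTES = 30.0
STEP_UP_SCORE = 3

def flat_rule(ctx: PaymentContext) -> str:
    """The blunt approach: block every transfer of $5,000 or more."""
    return "block" if ctx.amount >= 5_000 else "allow"

def contextual_rule(ctx: PaymentContext) -> Tuple[str, List[str]]:
    """Score the situation around the payment; pause only when the context stacks up."""
    score = 0
    reasons: List[str] = []
    if ctx.first_payment_to_payee:
        score += 2
        reasons.append("first-ever payment to this payee")
    if ctx.foreign_destination:
        score += 1
        reasons.append("overseas destination")
    if ctx.outside_usual_hours:
        score += 1
        reasons.append("outside the customer's usual hours")
    if ctx.on_active_call:
        score += 3
        reasons.append("customer is on a call during the session")
    elif ctx.minutes_since_inbound_call is not None and ctx.minutes_since_inbound_call <= RECENT_CALL_MINUTES:
        score += 2
        reasons.append("payment follows an inbound call")
    if ctx.amount >= LARGE_AMOUNT and score >= STEP_UP_SCORE:
        return "pause_and_verify", reasons
    return "allow", reasons

# The example from the text: $5,000, first payment, overseas account, right after a call.
scam_like = PaymentContext(5_000.0, True, True, False, False, 5.0)
# A routine $5,000 payment to a long-standing domestic payee with no unusual context.
routine = PaymentContext(5_000.0, False, False, False, False, None)

print(flat_rule(routine))             # block (overkill for a routine payment)
print(contextual_rule(routine)[0])    # allow
print(contextual_rule(scam_like)[0])  # pause_and_verify
```

Additive scoring keeps each signal explainable, and the `reasons` list could be shown to the customer during the pause. The sketch only decides when to intervene; it says nothing about who actually receives the money.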

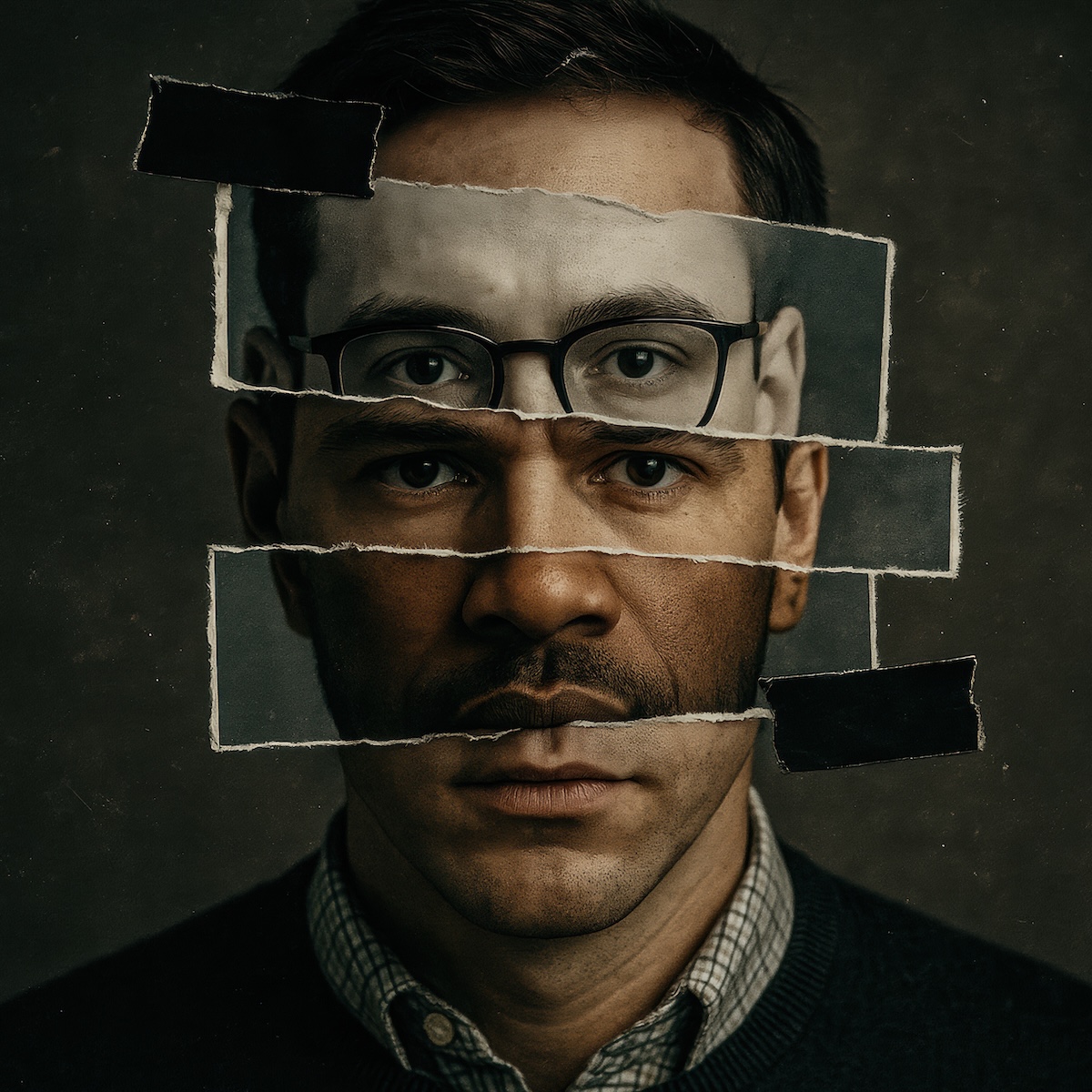

Another new threat vector is the rise of AI-powered scams. Artificial intelligence is turbocharging social engineering by making impersonation easier and more convincing. Fraudsters can now clone voices and create deepfake videos with startling accuracy. Imagine getting a call that sounds exactly like your panicked spouse or child pleading for money; today’s AI tools are fully capable of making that a reality. Side note: my wife, daughters, and I have a safe word to verify these calls if we ever get one. It makes me laugh knowing what it is, so good luck to AI figuring it out!

Scammers have used AI voice cloning to pull off “vishing” heists, where victims truly believe their loved one is in danger on the phone. AI can also churn out limitless personalized phishing emails or texts that are grammatically perfect and contextually tailored, making scam messages harder to distinguish from legitimate communication. In short, the bad guys are embracing every tech tool available to make their deceptions more believable. This puts even more pressure on institutions to verify and confirm intent before money leaves a customer’s account.

In Part 3, the final installment in this series, we’ll propose a shift from one-off authentication to a persistent identity model purpose-built to tie identity to transactions, in order to fight scams and the other fraud committed by first-party bad actors.
